Introduction

Yao et al., 2022 introduced a framework named ReAct in which LLMs generate both reasoning traces and task-specific actions in an interleaved manner.

In short: ReAct has an LLM interleave reasoning traces with task-specific actions.

Results show that ReAct can outperform several state-of-the-art baselines on language and decision-making tasks. ReAct also improves the human interpretability and trustworthiness of LLMs. Overall, the authors found that the best approach combines ReAct with chain-of-thought (CoT), which allows the model to use both its internal knowledge and the external information obtained during reasoning.

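CoT behaves similarly to ReAct in that both produce an answer through reasoning traces. However, CoT has no access to the external world and cannot update its knowledge, which can lead to problems such as fact hallucination and error propagation.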

Generating reasoning traces allows the model to induce, track, and update action plans, and even to handle exceptions. The action step allows the model to interface with and gather information from external sources such as knowledge bases or environments.

Handling exceptions is quite important. Could an agent adjust its actions based on the exceptions a program raises?
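One possible answer to that question is to catch a tool's exception and hand it back to the model as the next observation, so the following thought can react to it. The sketch below is only an illustration: the `TOOLS` registry, the `lookup` tool, and the `run_action` helper are hypothetical names, not something the paper defines.

```python
from typing import Callable, Dict


def lookup(term: str) -> str:
    """Hypothetical toy tool: look a term up in a tiny in-memory knowledge base."""
    knowledge_base = {
        "ReAct": "A prompting framework that interleaves reasoning and acting.",
    }
    return knowledge_base[term]  # raises KeyError for unknown terms


# Hypothetical registry mapping action names to tool functions.
TOOLS: Dict[str, Callable[[str], str]] = {"lookup": lookup}


def run_action(name: str, argument: str) -> str:
    """Run a tool and always return an observation string, even on failure."""
    try:
        return TOOLS[name](argument)
    except Exception as exc:
        # The error text becomes the observation, so the next thought can
        # choose a different tool or a different argument.
        return f"Error: {type(exc).__name__}: {exc}"
```

With this design a failed call does not stop the agent; the model simply sees an observation that starts with `Error:` and can plan around it.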

How it works

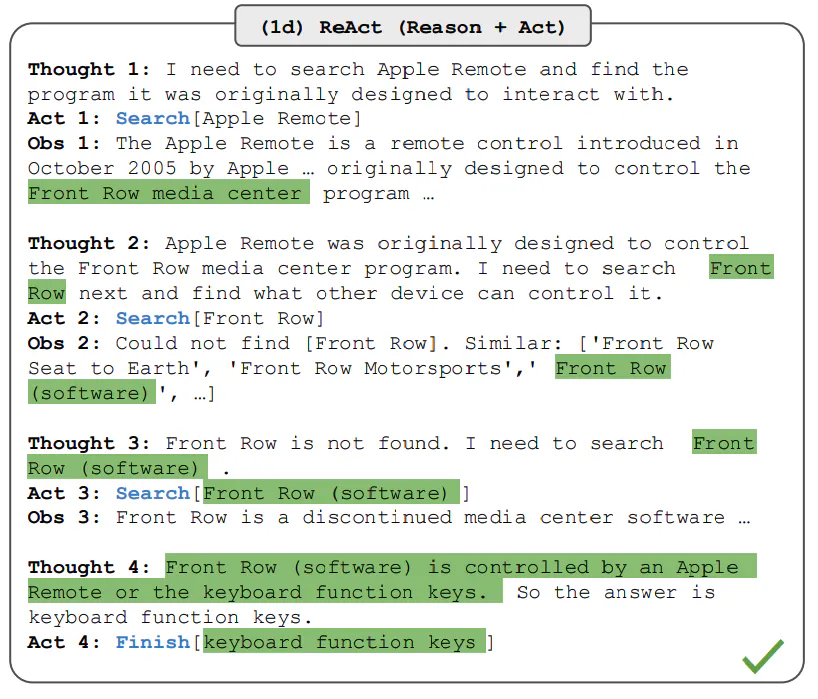

ReAct is a general paradigm that combines reasoning and acting with LLMs. ReAct prompts LLMs to generate verbal reasoning traces and actions for a task. This allows the system to perform dynamic reasoning to create, maintain, and adjust plans for acting, while also enabling interaction with external environments (e.g., Wikipedia) to incorporate additional information into the reasoning. The figure above shows an example of ReAct and the different steps involved in performing question answering.

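ReAct is reasoning plus acting: based on the observation from the previous step, the model produces a new thought and then takes an action. In other words, observation + thought together make up the reasoning, which is then followed by an action.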
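The thought, action, observation cycle can be sketched as a small loop. This is a minimal sketch under stated assumptions, not the authors' implementation: the `name[argument]` action syntax, the `finish` action, the `react_loop` function, and the scripted stand-in model are all chosen here for illustration.

```python
import re
from typing import Callable, Dict


def react_loop(llm: Callable[[str], str],
               tools: Dict[str, Callable[[str], str]],
               question: str,
               max_steps: int = 5) -> str:
    """Alternate Thought/Action (from the model) with Observation (from a tool)."""
    transcript = f"Question: {question}\n"
    for step in range(1, max_steps + 1):
        # Assumed output format: "Thought: ...\nAction: name[argument]"
        output = llm(transcript)
        transcript += f"{output}\n"
        match = re.search(r"Action: (\w+)\[(.*)\]", output)
        if match is None:
            break  # the model did not produce a parsable action
        name, argument = match.groups()
        if name == "finish":
            return argument
        observation = tools[name](argument)
        # The observation is appended so the next thought can build on it.
        transcript += f"Observation {step}: {observation}\n"
    return "no answer"


def scripted_llm(transcript: str) -> str:
    """Stand-in for a real model: the reply depends on what has been observed."""
    if "Observation 1" not in transcript:
        return "Thought: I need the capital of France.\nAction: search[France]"
    return "Thought: The observation says Paris.\nAction: finish[Paris]"


tools = {"search": lambda query: "Paris is the capital of France."}
answer = react_loop(scripted_llm, tools, "What is the capital of France?")
print(answer)
```

Swapping `scripted_llm` for a call to a real model and `tools` for real search or lookup functions keeps the loop unchanged.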

ReAct Prompting

The first step is to select cases from a training set (e.g., HotPotQA) and compose ReAct-format trajectories. These are used as few-shot exemplars in the prompts. The trajectories consist of multiple thought-action-observation steps, as shown in the figure above. The free-form thoughts serve different purposes, such as decomposing questions, extracting information, performing commonsense or arithmetic reasoning, guiding search formulation, and synthesizing the final answer.
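As a rough illustration of how such exemplars might be assembled into a prompt, the sketch below formats trajectories as numbered thought, action, and observation lines and appends the new question. The `Trajectory` class, the helper names, and the exemplar itself are hand-written assumptions for this example; the exemplar is not taken from HotPotQA or from the paper's prompts.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Trajectory:
    question: str
    steps: List[Tuple[str, str, str]]  # (thought, action, observation)


def format_trajectory(t: Trajectory) -> str:
    """Render one exemplar as numbered Thought/Action/Observation lines."""
    lines = [f"Question: {t.question}"]
    for i, (thought, action, observation) in enumerate(t.steps, start=1):
        lines.append(f"Thought {i}: {thought}")
        lines.append(f"Action {i}: {action}")
        if observation:  # the final step has no observation
            lines.append(f"Observation {i}: {observation}")
    return "\n".join(lines)


def build_prompt(exemplars: List[Trajectory], new_question: str) -> str:
    """Concatenate the few-shot exemplars and leave the first thought open."""
    shots = "\n\n".join(format_trajectory(t) for t in exemplars)
    return f"{shots}\n\nQuestion: {new_question}\nThought 1:"


# Hand-written illustrative exemplar (an assumption, not a dataset item).
exemplar = Trajectory(
    question="Which is taller, Mount Everest or K2?",
    steps=[
        ("I need the height of each mountain.",
         "Search[Mount Everest]", "Mount Everest is 8,849 m tall."),
        ("Now I need the height of K2.",
         "Search[K2]", "K2 is 8,611 m tall."),
        ("8,849 m is more than 8,611 m, so Everest is taller.",
         "Finish[Mount Everest]", ""),
    ],
)
prompt = build_prompt([exemplar], "Which is longer, the Nile or the Amazon?")
print(prompt)
```

Ending the prompt with `Thought 1:` nudges the model to continue in the same format as the exemplars.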

ReAct best practice (scenarios where ReAct works best)

Knowledge-Intensive Tasks

The paper first evaluates ReAct on knowledge-intensive reasoning tasks such as question answering (HotPotQA) and fact verification (Fever). PaLM-540B is used as the base model for prompting.

The results show that ReAct outperforms CoT on Fever but lags behind CoT on HotpotQA. A detailed error analysis is provided in the paper. In summary:

- CoT suffers from fact hallucination.

- ReAct's structural constraint reduces its flexibility in formulating reasoning steps.

- ReAct depends heavily on the information it retrieves; non-informative search results derail the model's reasoning and make it hard to recover and reformulate thoughts.

Prompting methods that combine and support switching between ReAct and CoT+Self-Consistency generally outperform all the other prompting methods.

In short, ReAct combined with CoT + Self-Consistency gives the best results.
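One way such switching could work is a pair of back-off rules: fall back to CoT + Self-Consistency when ReAct returns no answer, and fall back to ReAct when the self-consistency vote is weak. The sketch below is modeled on the back-off heuristics described in the paper, but the function names, the sample count, and the exact threshold are assumptions made for illustration.

```python
from collections import Counter
from typing import Callable, List, Optional, Tuple


def majority_answer(samples: List[str]) -> Tuple[str, int]:
    """Return the most common answer and how many samples agreed on it."""
    answer, count = Counter(samples).most_common(1)[0]
    return answer, count


def react_then_cot_sc(react: Callable[[str], Optional[str]],
                      sample_cot: Callable[[str], str],
                      question: str,
                      n_samples: int = 5) -> str:
    """Try ReAct first; if it returns no answer, back off to CoT-SC."""
    answer = react(question)
    if answer is not None:
        return answer
    samples = [sample_cot(question) for _ in range(n_samples)]
    return majority_answer(samples)[0]


def cot_sc_then_react(react: Callable[[str], Optional[str]],
                      sample_cot: Callable[[str], str],
                      question: str,
                      n_samples: int = 5) -> Optional[str]:
    """Try CoT-SC first; if fewer than half the samples agree, back off to ReAct."""
    samples = [sample_cot(question) for _ in range(n_samples)]
    answer, count = majority_answer(samples)
    if count >= n_samples / 2:  # assumed confidence threshold
        return answer
    return react(question)
```

Here `react` stands for any function that runs a ReAct episode and returns `None` when no answer is found within its step budget, and `sample_cot` stands for one sampled chain-of-thought answer; both would wrap real model calls in practice.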

Decision Making Tasks

The paper also reports results demonstrating ReAct's performance on decision-making tasks. ReAct is evaluated on two benchmarks, ALFWorld (a text-based game) and WebShop (an online shopping website environment). Both involve complex environments that require reasoning to act and explore effectively.

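The ReAct prompts for these tasks are designed differently while keeping the same core idea of combining reasoning and acting. The paper includes an example of an ALFWorld problem (a text game similar to an escape-room puzzle) solved with ReAct prompting.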

ReAct outperforms Act on both ALFWorld and WebShop. Act, which produces actions without any thoughts, fails to correctly decompose goals into subgoals. Reasoning appears to be advantageous in ReAct for these types of tasks, but current prompting-based methods are still far from the performance of expert humans.
